Ingest Tool

The Ingest Tool can be accessed from the Optic Toolbar.

Use the Ingest Tool to load structured data in CSV, JSON, or JSONL format.

Synapse users can leverage the Ingest Tool to load one-off structured data into Synapse on demand.

Synapse developers can use the Ingest Tool to prototype and test Storm that may eventually be used as a Storm service or Power-Up.

The basic steps for loading data with the Ingest Tool are the same in all cases:

Create a new ingest.

Specify the source of the data to ingest and load the source file. The source can be:

A URL hosting the content.

A local file that you upload.

Review and optionally edit the source data.

Provide an ingest script (using Storm) that tells Synapse how to load the data.

Test your ingest.

Run the ingest to load the data.

Tip

Before you ingest your data, you should:

verify that you know what the fields and values in your source data represent; and

decide how you want to use the data to create objects in Synapse.

That is, how does your source data map into Synapse’s data model (nodes, properties, and tags)?
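One way to get a feel for your source data before opening the Ingest Tool is to summarize it outside of Synapse. The Python sketch below is a minimal example of that idea and is not part of Optic. It counts the distinct values in one column of a CSV so you can decide which forms each kind of value should map to. The helper name count_column_values and the sample rows are made up for illustration.

```python
import csv
import io
from collections import Counter


def count_column_values(csv_text, column_index):
    """Count how often each value appears in one column of CSV text."""
    reader = csv.reader(io.StringIO(csv_text))
    # Skip completely empty lines, which csv.reader yields as [].
    return Counter(row[column_index] for row in reader if row)


# Hypothetical sample rows (type, value); substitute your own file contents.
sample = (
    "URL,http://example.com/a\n"
    "Domain,example.com\n"
    "Domain,example.org\n"
)

counts = count_column_values(sample, 0)
for value, count in counts.most_common():
    print(value, count)
```

Each distinct value in the output is a candidate for its own handling in your ingest script.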

Create a New Ingest

Note

We strongly encourage you to fork a view prior to creating and testing your ingest. This isolates your test data from your production environment and allows you to discard your test data later. When your testing is complete, you can ingest your data into a “clean” fork, check it (again!) and then merge the data into production.

In the Ingest Tool, click the + New Ingest button.

In the New Ingest dialog, in the Name field, enter a name for your ingest.

Follow the instructions below depending on whether you are uploading a file or retrieving a file from a URL.

Tip

If you are loading a CSV file, verify whether or not the file contains a header row before proceeding.
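If you are unsure whether a CSV file has a header row, looking at the first few parsed rows is usually enough to tell. The Python sketch below, run outside of Optic, shows one way to do that. The helper name first_rows and the sample text are assumptions for illustration only.

```python
import csv
import io
from itertools import islice


def first_rows(csv_text, count=3):
    """Return the first `count` parsed rows of CSV text."""
    reader = csv.reader(io.StringIO(csv_text))
    return list(islice(reader, count))


# Hypothetical sample; the first row here is a header row.
sample = "type,value\nDomain,example.com\nIPv4Address,192.0.2.10\n"

preview = first_rows(sample, 2)
for row in preview:
    print(row)
```

If the first row contains labels rather than data, set the header row toggle accordingly when you load the file.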

Load a File from Local Disk

Click the Select a file button to upload a file from disk.

Select the file you wish to upload.

Synapse should automatically recognize the file type. If it does not, you can specify the file type using the File… drop-down menu.

If the file is a CSV file, use the header row toggle to indicate whether the CSV file has a header row (green) or not (gray).

Click the Upload button to upload your file.

Load a File from a URL

In the Enter a URL… field, enter the URL for the file you want to ingest.

Use the File… drop-down menu to select the type of file you are downloading.

If the file is a CSV file, use the header row toggle to indicate whether the CSV file has a header row (green) or not (gray).

Click the Upload button to retrieve your file from the specified URL.

Change the Ingest Tool Layout

You can adjust the layout of the Ingest Tool to provide more (or less) room for the file preview, Storm editor, and ingest output windows.

Show or Hide File Preview

Toggle the arrow next to the file preview header to show or hide the preview.

Resize the File Preview

Click and drag the horizontal line between the file preview and the Storm editor and ingest output windows to resize these sections.

Resize the Storm Editor and Ingest Output

Click and drag the vertical lines between the Storm editor and ingest output to resize these windows.

Review and Edit Your Data

Tip

If you do not need to review or modify your data, skip to Add Your Storm Code to Ingest the File.

Edit Your JSON File

The content of a JSON file will be displayed in a Storm editor window in the file preview section.

Make any changes directly in the file preview window. The Ingest Tool saves your changes automatically.

Edit Your CSV or JSONL File

The content of CSV and JSONL files will be displayed as a set of rows in the file preview section. CSV and JSONL data can both be reviewed and edited in the same way.

Tip

In a JSONL file, each row is a single, editable field. In a CSV file, each column is individually editable.

In the file preview section, use the left ( < ) and right ( > ) arrows to page through the rows of loaded data.

To jump to a specific row, edit the “row to start at” field.

To save your changes, press Tab or Enter, or click anywhere outside the “row to start at” field.

Tip

When you change the “row to start at” value, the “rows to process” field automatically updates to reflect the number of remaining rows. You can modify one or both fields when you are ready to test your ingest and load the data.

To edit the data, double-click the field you want to modify. Make any edits and press Enter to save your changes.

To remove a row, click the X to the left of the row.

Add Your Storm Code to Ingest the File

In the Storm editor window, enter the Storm that you will use to process your file.

Synapse automatically saves changes as you make them; a green check mark briefly appears in the upper right corner of the Storm editor window.

Tip

For additional tips and example Storm ingest code, see the Ingest Examples below.

Test Your Ingest

Note

We strongly recommend testing your ingest in a forked view.

Click the Run ingest button (the “play” button) or press Shift-Enter to run your Storm ingest code and test your ingest.

Any status messages, warnings, or errors will appear in the ingest output window.

Tip

For large CSV or JSONL files, you can test your ingest on a subset of rows by editing the “row to start at” and “rows to process” fields.

Tip

You can use Shift-Enter to run a query in many of the multi-line editors in Optic, including in the Storm Editor Tool, Ingest Tool, and the Bookmark Manager.

If your Storm ingest runs without errors, you can use the Research Tool to view your newly created nodes to ensure they were created as expected.

Run Your Ingest

Note

We strongly recommend running your ingest in a forked view, and then merging the loaded data into your production layer. This is a “best practice” that gives you one more opportunity to review the data before adding it to production.

Click the Run ingest button (the “play” button) or press Shift-Enter to run your Storm ingest code.

Tip

For CSV or JSONL files, be sure to reset the “row to start at” and “rows to process” fields if you changed them during testing.

You can use the Research Tool to view and verify your newly created nodes.

Ingest Examples

The Storm ingest examples below illustrate some of the basic concepts and processes for ingesting CSV, JSON, and JSONL data. The sample Storm is heavily commented; ideally, users who are familiar with Storm (but who may not have a programming background) can use these examples as the basis for writing their own ingest without needing to deep dive into more advanced Storm concepts.

Note

The examples below represent basic ingest scripts suitable for one-time or limited use, and are meant to illustrate common ingest features in a way that is accessible to general users. These features include: the use of variables; how to reference values (columns, name-value pairs) in a source file; simple use of control flow elements like for loops or switch cases; guid generation; and node creation.

The examples are valid (they correctly load the source data), but are not meant to represent robust, reusable Storm (such as might be used to ingest more complex data, for recurring ingest from a particular source, or as the basis of production code such as a custom Power-Up).

Users or developers writing ingest code for long-term use are encouraged to review Storm concepts such as variables, methods, and control flow elements in more detail. Refer to the relevant sections of the Synapse User Guide or the Synapse Developer Guide for more information.

Tip

If you are creating guid forms with your ingest, we strongly recommend creating predictable / deconflictable guids; see the Storm Reference on type-specific behavior for tips on guid generation.
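To see why predictable guids matter, the Python sketch below derives an identifier from a fixed list of input values. It only illustrates the general idea of a deterministic identifier. It is not how Synapse's $lib.guid() computes its values, and stable_id is a hypothetical helper. The point is that the same inputs always yield the same identifier, so loading the same record twice refers to one object rather than creating a duplicate.

```python
import hashlib
import json


def stable_id(*parts):
    """Derive a repeatable 32-character hex identifier from the given parts.

    Illustration only: this is NOT the algorithm Synapse uses for guids.
    """
    encoded = json.dumps(parts).encode("utf-8")
    return hashlib.md5(encoded).hexdigest()


# Hypothetical inputs: a source label plus values present in every record.
first = stable_id("my_source", "2018-12-20T08:53:57.889924185Z", "Killed\n")
again = stable_id("my_source", "2018-12-20T08:53:57.889924185Z", "Killed\n")
other = stable_id("my_source", "2018-12-21T02:58:19.048581795Z", "Killed\n")

print(first == again)  # same inputs, same identifier
print(first == other)  # different inputs, different identifier
```

Including a string that names the data source helps keep identifiers from different sources from colliding when the remaining values happen to match.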

Example CSV Ingest

The Ingest Tool provides you with basic “sample code” as a guide to creating your Storm CSV ingest file:

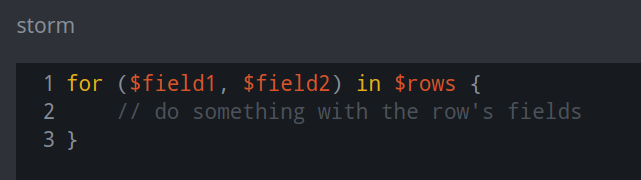

For CSV files:

$rows is a variable that represents the set of rows in the CSV file.

The variables in parentheses, ($field1, $field2), represent the columns in the CSV file. The “sample code” shows two variables; you will need to specify one variable for each column in your CSV.

A for loop is used to iterate over each row in the CSV. Inside the for loop, your Storm should tell Synapse what to do with the data in each row.

Tip

In the for statement, you must create a variable for each column in your CSV file, even if you plan to ignore some columns when ingesting your data.

The variable is used to refer to the value in the column for a given row.

You can name the variables whatever you like. Most people find it helpful to use names that refer to the content of the column (e.g., $url is more useful than $field4). If your CSV file has column headers, you can use these as variable names, though it is not required.
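Because the for statement needs one variable per column, it helps to confirm how many columns your CSV has and whether every row has the same number. The Python sketch below shows one way to check this outside of Optic. The helper name column_counts and the sample rows are assumptions for illustration.

```python
import csv
import io
from collections import Counter


def column_counts(csv_text):
    """Map each row length to the number of rows that have that length."""
    reader = csv.reader(io.StringIO(csv_text))
    return Counter(len(row) for row in reader)


# Hypothetical sample: the third row has an unexpected extra column.
sample = (
    "URL,http://example.com/a\n"
    "Domain,example.com\n"
    "Domain,example.org,extra\n"
)

lengths = column_counts(sample)
for width, rows in sorted(lengths.items()):
    print(f"{rows} row(s) with {width} column(s)")
```

A single row length in the output tells you how many variables to declare. More than one length suggests the file needs cleanup before you ingest it.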

Sample CSV Data

For our sample CSV data, we used the CSV file associated with ClearSky Cyber Security’s report on Operation Wilted Tulip (the CSV is linked at the end of the article). The direct link to the CSV is:

https://www.clearskysec.com/wp-content/uploads/2017/07/indicators-wilted_tulip.csv

Note

Due to the way ClearSky handles HTTP requests for the link above, this particular CSV cannot be loaded into the Ingest Tool by specifying the URL. You will need to download and save the CSV locally and then upload it.

Sample CSV Ingest Code

    // The variables $type and $value represent the two columns in the CSV
    // Use a for loop to iterate over each row
    for ($type, $value) in $rows {

        // The CSV lists different kinds of indicators
        // Use a 'switch' case to handle each differently based on the value
        // in the 'type' column
        switch $type {

            // Make a URL / inet:url node
            // Use 'edit try' (?=) to gracefully handle any bad data
            'URL': { [ inet:url?=$value ] }

            // Handle other kinds of data
            'SSLCertificate': { [ hash:sha1?=$value ] }
            'IPv4Address': { [ inet:ipv4?=$value ] }
            'Filename': { [ file:base?=$value ] }
            'Domain': { [ inet:fqdn?=$value ] }
            'DNSName': { [ inet:fqdn?=$value ] }

            // Value for a 'hash' in the CSV could be any of these; try each
            'Hash': {
                [
                    hash:md5?=$value
                    hash:sha1?=$value
                    hash:sha256?=$value
                ]
            }

            // Optional 'default' case to handle any types not listed above
            // The CSV contains 'Detection name' items at the end - we did
            // not account for those (though you could create it:av:signame nodes).
            *: {
                // Print warning for bad data
                $lib.print("Type '{type}' not handled.",type=$type)
            }
        }
        // End of 'switch' case

        // Whatever node was created by the 'switch' statement is now in our pipeline
        // Tag whatever we created to show that ClearSky associates the nodes with the
        // 'Copy Kittens' threat group and operation 'Wilted Tulip'
        [
            +#rep.clearsky.copykittens
            +#rep.clearsky.wiltedtulip
        ]
    }
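The 'Hash' case above tries three forms because the CSV does not say which algorithm produced each value. This approach relies on each hash form accepting only hex strings of one fixed length, so at most one of the three edit try operations should succeed for a given value. The Python sketch below illustrates the same idea using the standard hex digest lengths (32 characters for MD5, 40 for SHA-1, 64 for SHA-256). The helper guess_hash_form is hypothetical and is not a Synapse API.

```python
import string

# Standard hex digest lengths mapped to the corresponding Synapse form names.
HASH_FORMS_BY_LENGTH = {32: "hash:md5", 40: "hash:sha1", 64: "hash:sha256"}


def guess_hash_form(value):
    """Return the form name matching a hex string's length, or None."""
    text = value.strip().lower()
    if not text or any(ch not in string.hexdigits for ch in text):
        return None
    return HASH_FORMS_BY_LENGTH.get(len(text))


print(guess_hash_form("d41d8cd98f00b204e9800998ecf8427e"))
print(guess_hash_form("not-a-hash"))
```

A value that matches none of the lengths is simply skipped, which mirrors how edit try ignores values that a form cannot accept.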

Example JSONL Ingest

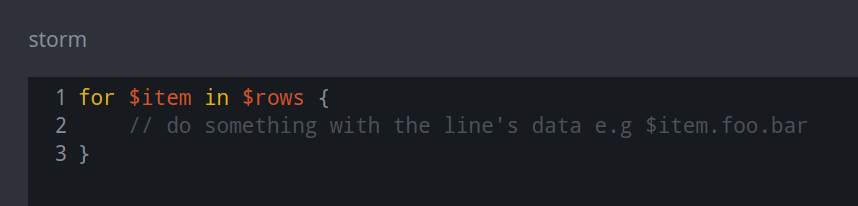

The Ingest Tool provides you with basic “sample code” as a guide to creating your Storm JSONL ingest file:

For JSONL files:

The Ingest Tool treats each newline-separated JSON object (each line in a JSONL file) as an individual row.

$rows refers to the set of “rows” (JSON lines).

$item refers to an individual “row” (JSON line).

A for loop is used to iterate over each JSON line. Inside the for loop, your Storm should tell Synapse what to do with the data in each JSON line.

Tip

You can reference name-value pairs within a JSON line relative to $item. For example, if a JSON line contains the name-value pair "hurr" : "derp", you can reference it using $item.hurr.
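Before loading a JSONL file, it can be useful to confirm that every line is valid JSON on its own. The Python sketch below, run outside of Optic, parses each line separately and reports the line numbers that fail. The helper name parse_jsonl and the sample text are assumptions for illustration.

```python
import json


def parse_jsonl(text):
    """Return (items, bad_line_numbers) for newline-delimited JSON text."""
    items, bad_lines = [], []
    for number, line in enumerate(text.split("\n"), start=1):
        if not line.strip():
            continue  # ignore blank lines, including the trailing one
        try:
            items.append(json.loads(line))
        except json.JSONDecodeError:
            bad_lines.append(number)
    return items, bad_lines


# Hypothetical sample: the third line is deliberately malformed.
sample = '{"hurr": "derp"}\n{"hurr": "second"}\n{not valid json}\n'

items, bad_lines = parse_jsonl(sample)
print(items[0]["hurr"])  # Python counterpart of $item.hurr for the first line
print(bad_lines)
```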

Sample JSONL Data

For our sample JSONL data, we used a simple example set of Docker log data (referenced in this blog). The sample data is shown below:

{"log":"2018-12-20T08:53:57.879Z - info: [Main] The selected providers are digitalocean/nyc1\n","stream":"stdout","time":"2018-12-20T08:53:57.889924185Z"}

{"log":"2018-12-20T08:53:58.037Z - debug: [Main] listen\n","stream":"stderr","time":"2018-12-20T08:53:58.038505968Z"}

{"log":"2018-12-21T02:57:48.522Z - error: [Master] Error: request error from target (GET http://21.24.14.28/resources/images/banner5.png on instance 124726553@157.230.11.151:3128): Error: socket hang up\n","stream":"stderr","time":"2018-12-21T02:57:48.539460901Z"}

{"log":"2018-12-21T02:57:53.021Z - debug: [Pinger] ping: hostname=142.93.199.42 / port=3128\n","stream":"stderr","time":"2018-12-21T02:57:53.339146838Z"}

{"log":"2018-12-21T02:57:53.122Z - debug: [Manager] checkInstances\n","stream":"stderr","time":"2018-12-21T02:57:53.339161183Z"}

{"log":"Killed\n","stream":"stderr","time":"2018-12-21T02:58:19.048581795Z"}

Sample JSONL Ingest Code

    // Various $lib.print / $lib.pprint statements for testing.
    // These can be un-commented to view the values or removed
    // if not needed.

    // Each JSON line is treated as an individual row.
    // $rows is the full set of data, $item is an individual row.
    // The for loop processes each row ($item) in turn.
    for $item in $rows {

        // Use the data to make it:log:event nodes
        // Define our variables
        $data=$item
        // $lib.pprint($item)
        $mesg=$item.log
        // $lib.print($mesg)

        // Synapse time values support a max resolution of epoch millis.
        // We'll use the full high-resolution timestamp from the log ($item.time)
        // to create our guid, but we'll need a truncated value to set the :time
        // property on the it:log:event node.
        $time=$item.time
        // $lib.print($time)
        $millis=$item.time.slice(0,23)
        // $lib.print($millis)

        // These are guid nodes, so we need to make them deconflictable.
        // We'll use a string representing our data source ('my_docker'),
        // the log timestamp, and the log data to create unique but
        // re-createable guids.
        $guid=$lib.guid(my_docker,$time,$mesg)
        // $lib.print($guid)

        // Create it:log:event node and set basic props
        [
            it:log:event=$guid
            :data=$data
            :mesg=$mesg
            :time=$millis
        ]

        // Set 'severity' based on checking regex of log data
        // This is kind of lame but ok for demo purposes
        // Severity is an 'enum' property - values are stored
        // as integers but can be set as either integers or strings,
        // and are displayed as strings.
        if ( $mesg~='debug' ) {
            [ :severity=10 ]
        }
        elif ( $mesg~='info' ) {
            [ :severity=20 ]
        }
        elif ( $mesg~='warning' ) {
            [ :severity=40 ]
        }
        elif ( $mesg~='error' ) {
            [ :severity=50 ]
        }
        else { }

        // $severity=:severity
        // $lib.print($severity)
        // $lib.print($node)

        // We want to use the Synapse 'scrape' command to parse the log data
        // ( :mesg property) for any indicators such as hashes, IPs, URLs,
        // servers, etc. and link them to the it:log:event node via 'refs'
        // light edges.
        | scrape :mesg --refs
    }
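The .slice(0,23) call in the sample keeps the first 23 characters of the timestamp, which covers everything up to and including the milliseconds. The Python sketch below applies the same slice to the first timestamp from the sample data to show what is kept. It assumes the timestamps are fixed-width ISO 8601 strings like those in the sample; the helper name truncate_to_millis is made up for illustration.

```python
from datetime import datetime


def truncate_to_millis(timestamp):
    """Keep 'YYYY-MM-DDTHH:MM:SS.mmm' (23 characters) and drop the rest."""
    return timestamp[:23]


raw = "2018-12-20T08:53:57.889924185Z"
millis = truncate_to_millis(raw)
print(millis)  # 2018-12-20T08:53:57.889

# The truncated value still parses as a timestamp with millisecond precision.
parsed = datetime.strptime(millis, "%Y-%m-%dT%H:%M:%S.%f")
print(parsed.microsecond)  # 889000
```

A fixed-position slice only works when every timestamp has the same layout. If your source mixes formats, check a few rows in the file preview before relying on it.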

Example JSON Ingest

The Ingest Tool provides you with basic “sample code” as a guide to creating your Storm JSON ingest file:

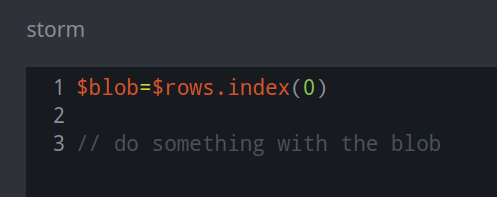

For JSON files:

The Ingest Tool treats a JSON object as a single “row” of data to load.

Similar to CSV and JSONL files, $rows refers to the set of rows, although there is only one row in this case.

$blob refers to the entire JSON object, which is located at the start (index(0)) of the set of rows ($rows).

Tip

You can reference a JSON name-value pair by its “location” in $rows.0. For example, the name-value pair "foo" : "bar" can be referenced using $rows.0.foo.

Your JSON may contain arrays of objects. To process these objects using the Ingest Tool, you can iterate through each object in the array (i.e., using a for loop).

To iterate over the objects in a JSON array named "records", you can use the for loop expression for $item in $rows.0.records { <do stuff> }.

You can reference name-value pairs within each object in the array relative to $item. If a record object contains the name-value pair "hurr" : "derp", you can reference it using $item.hurr.
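If you are more familiar with Python than Storm, the following sketch may help map the ideas across. It parses a trimmed response modeled on the sample data in this section, treats the parsed object the way the Ingest Tool treats $rows.0, and loops over its "results" array the way a Storm for loop would. The variable names are illustrative, and the trimmed response omits most fields.

```python
import json

# Trimmed response modeled on the sample IPv4 query data in this section.
response_text = """
{
  "queryValue": "67.225.140.4",
  "queryType": "ip",
  "results": [
    {"recordType": "A", "resolve": "cyberloqapi.com"},
    {"recordType": "A", "resolve": "turnscor.com"}
  ]
}
"""

blob = json.loads(response_text)  # plays the role of $rows.0

# Counterpart of: for $item in $rows.0.results { ... }
pairs = []
for item in blob["results"]:
    # item["resolve"] corresponds to $item.resolve
    pairs.append((item["resolve"], blob["queryValue"]))

for fqdn, ip in pairs:
    print(fqdn, ip)
```

Each (fqdn, ip) pair corresponds to the values the sample ingest code uses to create an inet:dns:a node.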

Sample JSON Data

For our sample JSON data, we used two different responses returned by PassiveTotal’s Community API for passive DNS (i.e., their /pt/v2/dns/passive endpoint). PassiveTotal allows you to query this API for a domain, an IPv4 address, or an IPv6 address. The basic ingest file below is meant to handle responses for either inet:ipv4 or inet:fqdn queries.

Sample JSON Response for IPv4 Query

{

"pager": null,

"queryValue": "67.225.140.4",

"queryType": "ip",

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-11 08:50:37",

"totalRecords": 3,

"results": [

{

"firstSeen": "2018-11-17 09:25:25",

"lastSeen": "2022-10-07 11:10:04",

"source": [

"riskiq"

],

"value": "67.225.140.4",

"collected": "2022-10-11 17:30:53",

"recordType": "A",

"resolve": "cyberloqapi.com",

"resolveType": "domain",

"recordHash": "bd01640f51eb68bace2b53880b52e4daf3b289e89cdc1ca2dfe8052ea867f82a"

},

{

"firstSeen": "2019-02-23 14:45:26",

"lastSeen": "2022-10-11 08:50:37",

"source": [

"riskiq",

"kaspersky"

],

"value": "67.225.140.4",

"collected": "2022-10-11 17:30:54",

"recordType": "A",

"resolve": "turnscor.com",

"resolveType": "domain",

"recordHash": "667f785648e057ed0acfc68b6f3f457e8c8acef19443c9a27fc85776e08dd37c"

},

{

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-11 06:46:40",

"source": [

"riskiq",

"kaspersky"

],

"value": "67.225.140.4",

"collected": "2022-10-11 17:30:54",

"recordType": "A",

"resolve": "bmglabtechusa.com",

"resolveType": "domain",

"recordHash": "0523d38581089ece536247b8421d35e06c4d8e59e191b81655e9ec9e9066258d"

}

]

}

Sample JSON Response for FQDN Query

{

"pager": null,

"queryValue": "bmglabtechusa.com",

"queryType": "domain",

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-12 09:25:17",

"totalRecords": 6,

"results": [

{

"firstSeen": "2010-09-09 09:29:11",

"lastSeen": "2022-10-12 09:25:17",

"source": [

"riskiq",

"pingly"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:17",

"recordType": "MX",

"resolve": "bmglabtechusa.com",

"resolveType": "domain",

"recordHash": "dd4cd62207578a72758c2897fa5b7dbb69bc6e7a7cfaadebc33b553dd8c14b90"

},

{

"firstSeen": "2014-11-02 21:22:06",

"lastSeen": "2022-10-12 09:25:17",

"source": [

"riskiq",

"pingly"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:17",

"recordType": "SOA",

"resolve": "admin@liquidweb.com",

"resolveType": "email",

"recordHash": "4092005907d52425e6db1eeb781283c758eaca205eef2998e6c4990ba32e1023"

},

{

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-11 06:46:40",

"source": [

"riskiq"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:16",

"recordType": "NS",

"resolve": "ns1.liquidweb.com",

"resolveType": "domain",

"recordHash": "eaa57520fa123223afea98ee676599c7472e2a08e23fd6ebab3d4ae3271da098"

},

{

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-12 09:25:17",

"source": [

"riskiq",

"pingly",

"mnemonic",

"kaspersky"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:17",

"recordType": "A",

"resolve": "67.225.140.4",

"resolveType": "ip",

"recordHash": "402bdd31d0cf9c37bfd2d905beb54d3c728cceec2333625c3e7dbaa7fa16f96c"

},

{

"firstSeen": "2010-08-02 08:42:09",

"lastSeen": "2022-10-11 06:46:40",

"source": [

"riskiq"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:16",

"recordType": "NS",

"resolve": "ns.liquidweb.com",

"resolveType": "domain",

"recordHash": "e46caf7dea9debc381d9a864a256d0e38d6576b1025ecce65ecbcf0835d3868d"

},

{

"firstSeen": "2014-11-02 21:22:06",

"lastSeen": "2022-10-04 15:01:28",

"source": [

"riskiq"

],

"value": "bmglabtechusa.com",

"collected": "2022-10-12 16:25:16",

"recordType": "SOA",

"resolve": "ns.liquidweb.com",

"resolveType": "domain",

"recordHash": "fb8e71931be4a340ea35b0c93b6b01da3aff307442ba177cd95adcc8a8d485fc"

}

]

}

Sample JSON Ingest Code

    // Various 'print' statements embedded for testing.
    // These can be un-commented to view the values or removed
    // if not needed.

    // A JSON blob is treated as a single 'CSV row' by the Ingest Tool
    $blob=$rows.index(0)
    // $lib.pprint($blob)

    // Reference JSON elements with '$rows.0.<element>'
    // Use a 'switch' case because we can query both IP and FQDN
    switch $rows.0.queryType {

        // Handle response data for IP / inet:ipv4
        ip: {

            // Set variable '$ip' to the value we queried
            $ip=$rows.0.queryValue
            // $lib.pprint($ip)

            // We have an array of results, so we need to iterate over them
            // Variable '$item' used to reference each result
            for $item in $rows.0.results {

                // Set variables so we can create an inet:dns:a node
                // Reference names as $<variable>.<name> so $item.<name>
                $first=$item.firstSeen
                // $lib.pprint($first)
                $last=$item.lastSeen
                // $lib.pprint($last)
                $fqdn=$item.resolve
                // $lib.pprint($fqdn)

                // Create the node
                // Use 'edit try' (?=) to gracefully handle any bad data
                [ inet:dns:a?=($fqdn,$ip) .seen?=($first,$last) ]

            } // End of for loop

        } // End of switch case for 'ip'

        // Handle response data for domain / FQDN
        domain: {

            // Set variable $fqdn to the value we queried
            $fqdn=$rows.0.queryValue
            // $lib.pprint($fqdn)

            // Iterate over results (as above)
            for $item in $rows.0.results {

                // Set variables (as above)
                $first=$item.firstSeen
                // $lib.pprint($first)
                $last=$item.lastSeen
                // $lib.pprint($last)
                $value=$item.resolve
                // $lib.pprint($value)
                $type=$item.recordType
                // $lib.pprint($type)

                // We have to handle different kinds of DNS records
                switch $item.recordType {

                    // For 'A' records, make an inet:dns:a node
                    // Use 'edit try' (?=) to gracefully handle any bad data
                    A: { [ inet:dns:a?=($fqdn,$value) .seen?=($first,$last) ] }

                    // Make other types of nodes depending on record type
                    MX: { [ inet:dns:mx?=($fqdn,$value) .seen?=($first,$last) ] }
                    NS: { [ inet:dns:ns?=($fqdn,$value) .seen?=($first,$last) ] }

                    // An SOA response may include a name server (FQDN) or email.
                    // SOA records are guid nodes - we want a "predictable" guid.
                    // This allows us to re-ingest the same data without creating
                    // duplicate nodes. "Best practices" recommend creating the
                    // guid using a string / strings representing the source
                    // combined with one or more values guaranteed to be present
                    // in the record.
                    SOA: {

                        // Specify how to create the guid
                        $guid=$lib.guid(passivetotal,soa,$fqdn,$value)
                        // $lib.print($guid)

                        // Create inet:dns:soa node
                        [
                            inet:dns:soa=$guid
                            :fqdn?=$fqdn
                            :email?=$value
                            :ns?=$value
                            .seen?=($first,$last)
                        ]

                    } // End of 'SOA' processing

                } // End of switch case

                // Check our work
                // $dns=$node.ndef()
                // $lib.print("Node is {dns}",dns=$dns)

            } // End of for loop

        } // End of 'domain' processing

    } // End 'queryType' switch case